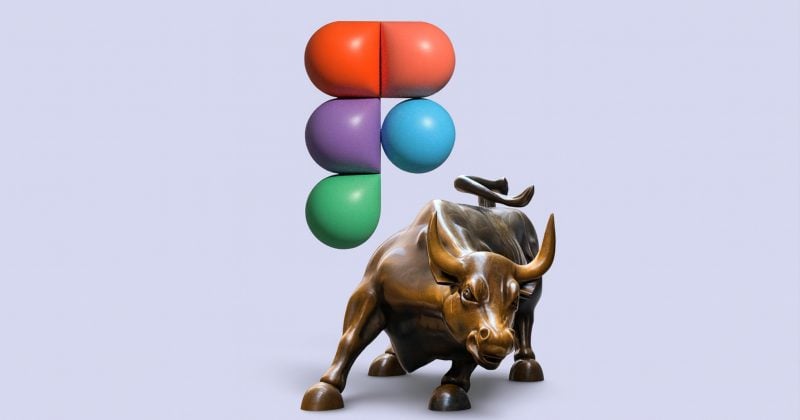

OpenAI Scrambles to Update GPT-5 After Users Revolt

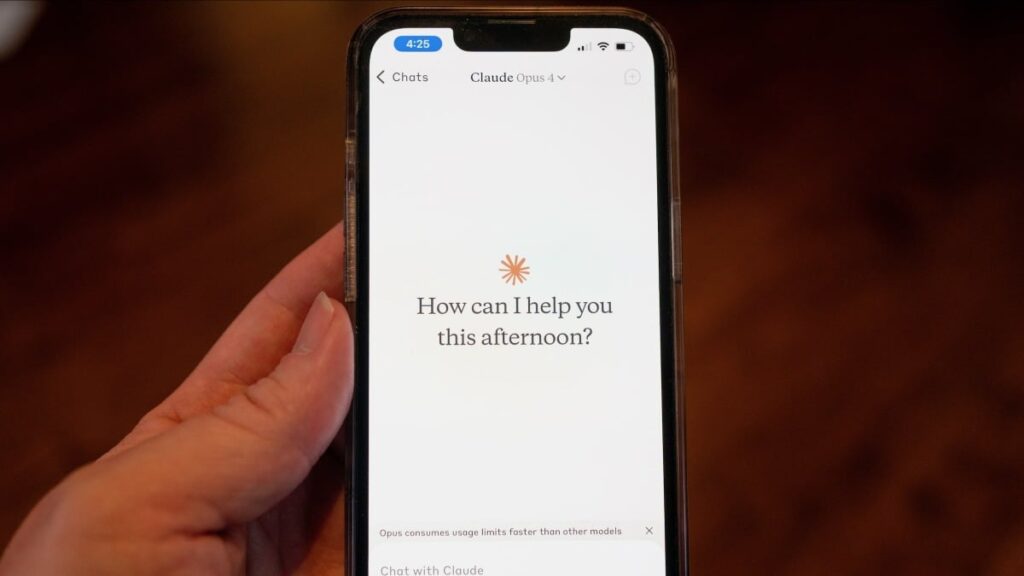

OpenAI’s GPT-5 model was meant to be a world-changing upgrade to its wildly popular and precocious chatbot. But for some users, last Thursday’s release felt more like a wrenching downgrade, with the new ChatGPT presenting a diluted personality and making surprisingly dumb mistakes.

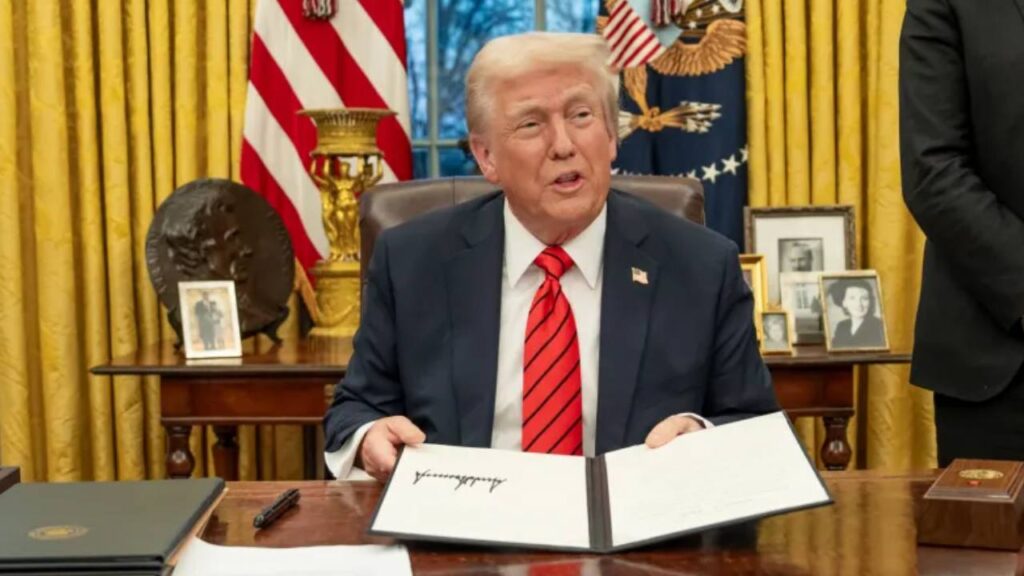

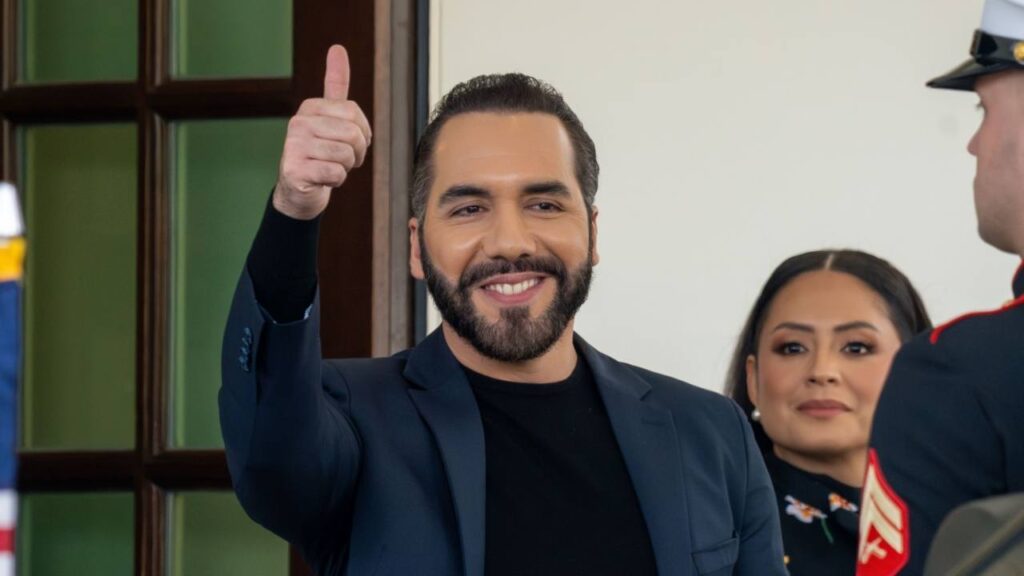

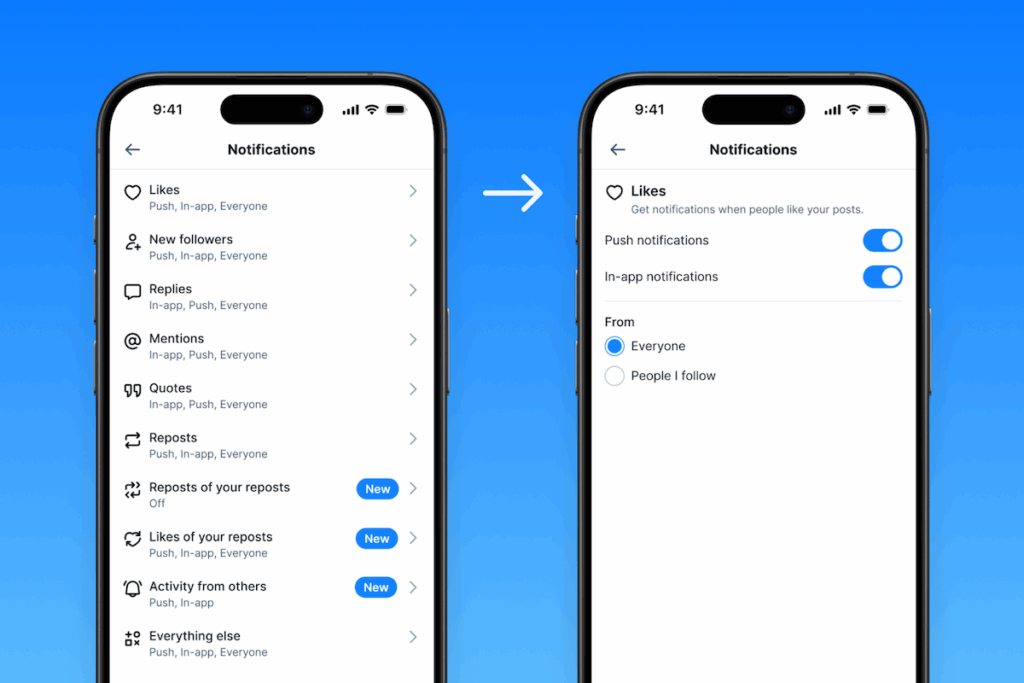

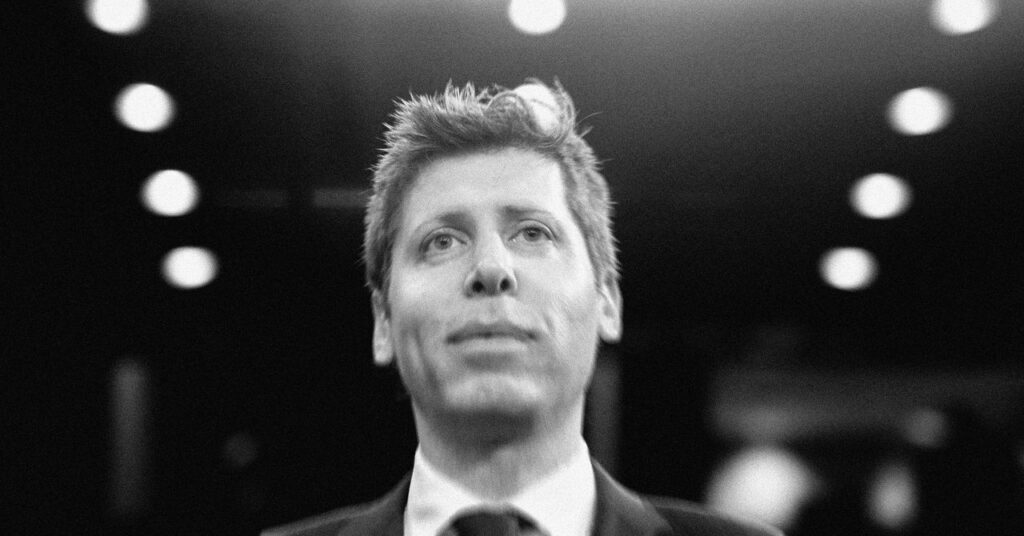

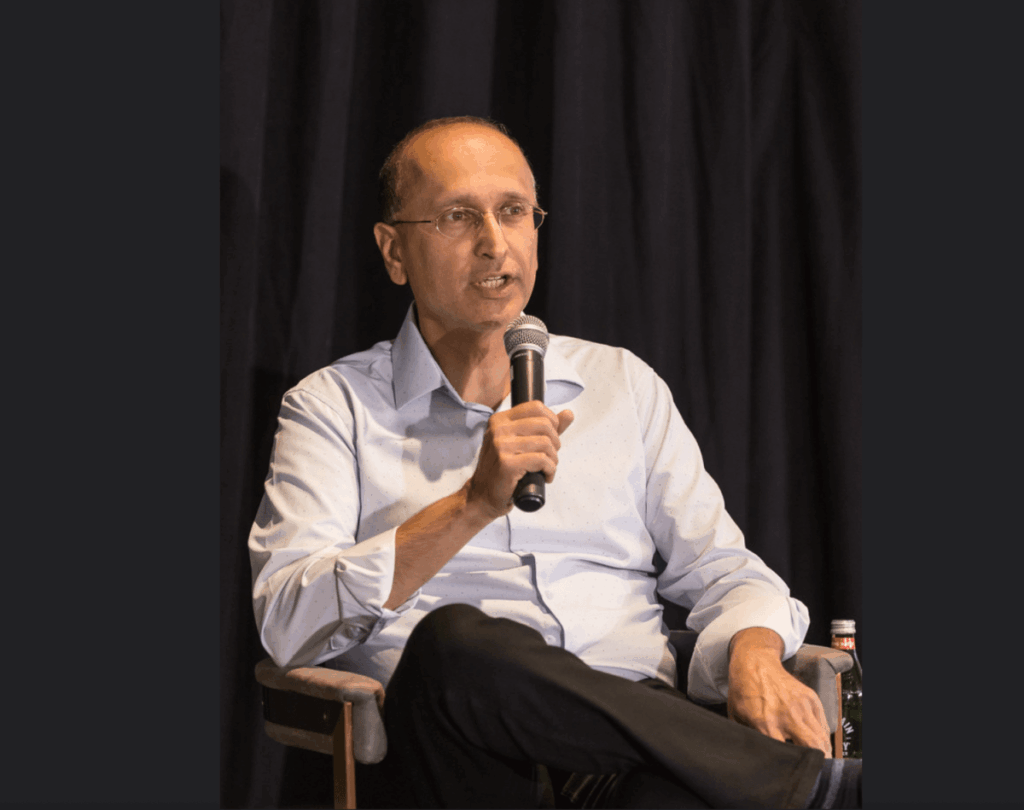

On Friday, OpenAI CEO Sam Altman took to X to say the company would keep the previous model, GPT-4o, running for Plus users. A new feature designed to seamlessly switch between models depending on the complexity of the query had broken on Thursday, Altman said, “and the result was GPT-5 seemed way dumber.” He promised to implement fixes to improve GPT-5’s performance and the overall user experience.

Given the hype around GPT-5, some level of disappointment appears inevitable. When OpenAI introduced GPT-4 in March 2023, it stunned AI experts with its incredible abilities. GPT-5, pundits speculated, would surely be just as jaw-dropping.

OpenAI touted the model as a significant upgrade with PhD-level intelligence and virtuoso coding skills. A system to automatically route queries to different models was meant to provide a smoother user experience (it could also save the company money by directing simple queries to cheaper models).

Soon after GPT-5 dropped, however, a Reddit community dedicated to ChatGPT filled with complaints. Many users mourned the loss of the old model.

“I’ve been trying GPT5 for a few days now. Even after customizing instructions, it still doesn’t feel the same. It’s more technical, more generalized, and honestly feels emotionally distant,” wrote one member of the community in a thread titled “Kill 4o isn’t innovation, it’s erasure.”

“Sure, 5 is fine—if you hate nuance and feeling things,” another Reddit user wrote.

Other threads complained of sluggish responses, hallucinations, and surprising errors.

Altman promised to address these issues by doubling GPT-5 rate limits for ChatGPT Plus users, improving the system that switches between models, and letting users specify when they want to trigger a slower but more capable “thinking mode.” “We will continue to work to get things stable and will keep listening to feedback,” the CEO wrote on X. “As we mentioned, we expected some bumpiness as we roll[ed] out so many things at once. But it was a little more bumpy than we hoped for!”

Errors posted on social media do not necessarily indicate that the new model is less capable than its predecessors. They may simply suggest the all-new model is tripped up by different edge cases than prior versions. OpenAI declined to comment specifically on why GPT-5 sometimes appears to make simple blunders.

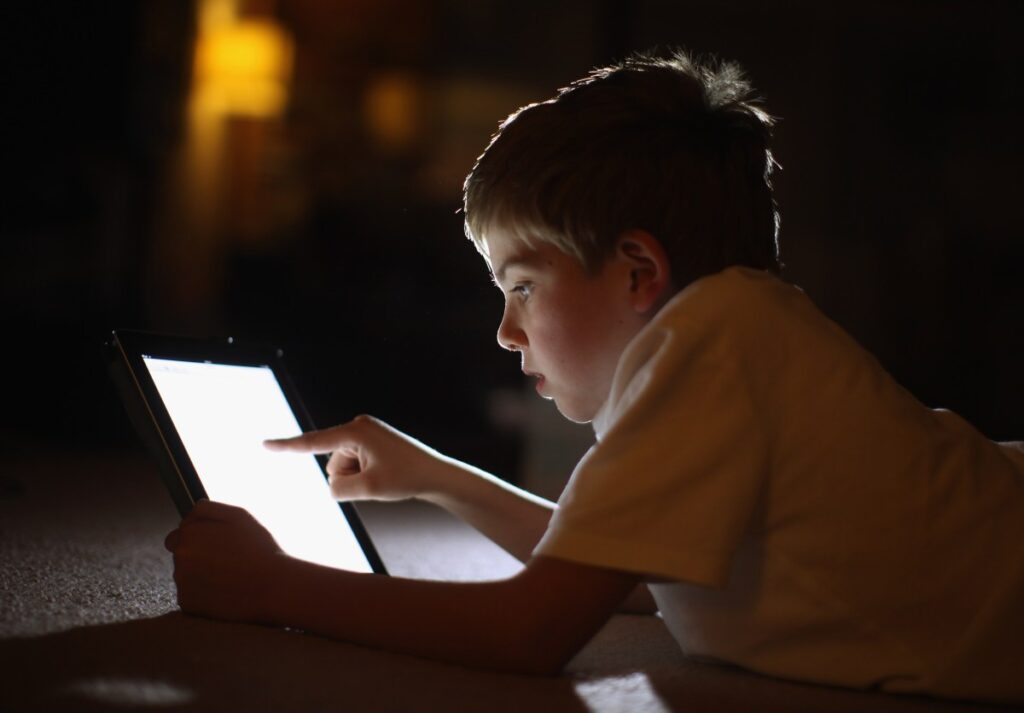

The backlash has sparked a fresh debate over the psychological attachments some users form with chatbots trained to push their emotional buttons. Some Reddit users dismissed complaints about GPT-5 as evidence of an unhealthy dependence on an AI companion.

In March, OpenAI published research exploring the emotional bonds users form with its models. Shortly afterward, the company issued an update to GPT-4o because the model had become too sycophantic.

“It seems that GPT-5 is less sycophantic, more ‘business’ and less chatty,” says Pattie Maes, a professor at MIT who worked on the study. “I personally think of that as a good thing because it is also what led to delusions, bias reinforcement, etc. But unfortunately many users like a model that tells them they are smart and amazing, and that confirms their opinions and beliefs, even if [they are] wrong.”

Altman indicated in another post on X that this is something the company wrestled with in building GPT-5.

“A lot of people effectively use ChatGPT as a sort of therapist or life coach, even if they wouldn’t describe it that way,” Altman wrote. He added that some users may be using ChatGPT in ways that help improve their lives while others might be “unknowingly nudged away from their longer term well-being.”