Tech Firms Concerned About Aug. 2 Deadline

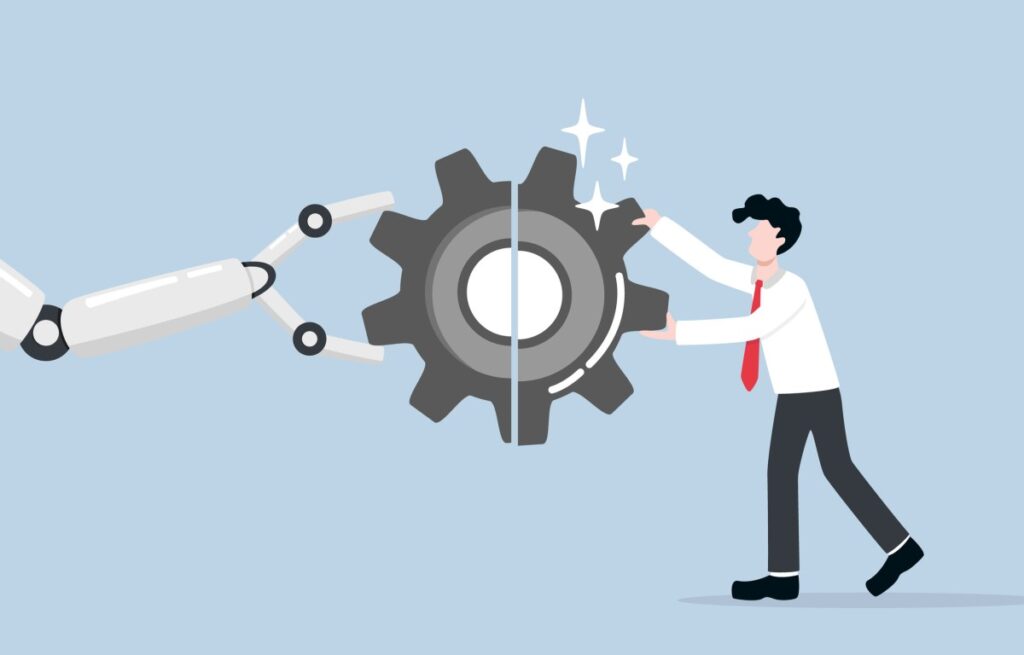

From Aug. 2, 2025, providers of general-purpose artificial intelligence (GPAI) models in the European Union must comply with key provisions of the EU AI Act. Requirements include maintaining up-to-date technical documentation and summaries of training data.

The AI Act outlines EU-wide measures aimed at ensuring that AI is used safely and ethically. It establishes a risk-based approach to regulation that categorises AI systems based on their perceived level of risk to and impact on citizens.

As the deadline approaches, legal experts are hearing from AI providers that the legislation lacks clarity, opening them up to potential penalties even if they intend to comply. Some of the requirements also threaten innovation in the bloc by asking too much of tech startups, while the legislation has little real focus on mitigating the risks of bias and harmful AI-generated content.

Oliver Howley, partner in the technology department at law firm Proskauer, spoke to TechRepublic about these shortcomings. “In theory, 2 August 2025 should be a milestone for responsible AI,” he said in an email. “In practice, it’s creating significant uncertainty and, in some cases, real commercial hesitation.”

Unclear legislation exposes GPAI providers to IP leaks and penalties

Behind the scenes, providers of AI models in the EU are struggling with the legislation as it “leaves too much open to interpretation,” Howley told TechRepublic. “In theory, the rules are achievable…. but they’ve been drafted at a high level and that creates genuine ambiguity.”

The Act defines GPAI models as having “significant generality” without clear thresholds, and requires providers to publish “sufficiently detailed” summaries of the data used to train their models. The ambiguity here creates an issue, as disclosing too much detail could “risk revealing valuable IP or triggering copyright disputes,” Howley said.

Some of the opaque requirements set unrealistic standards, too. The AI Code of Practice, a voluntary framework that tech companies can sign to help them implement and comply with the AI Act, instructs GPAI model providers to filter websites that have opted out of data mining from their training data. Howley said this is “a standard that’s difficult enough going forward, let alone retroactively.”
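To make the filtering requirement concrete, the sketch below shows one way a provider might drop opted-out sites from a list of candidate training URLs. It assumes the opt-out is expressed through robots.txt rules, which is only one possible mechanism. The crawler name `ExampleTrainingBot`, the hostnames, and the in-memory `ROBOTS_BY_HOST` table are all hypothetical; a real pipeline would fetch and cache each site's actual robots.txt.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical crawler name and robots.txt contents, for illustration only.
CRAWLER_AGENT = "ExampleTrainingBot"
ROBOTS_BY_HOST = {
    "news.example": ["User-agent: ExampleTrainingBot", "Disallow: /"],
    "blog.example": ["User-agent: *", "Allow: /"],
}


def is_opted_out(url: str) -> bool:
    """Return True if the host's recorded robots.txt disallows our crawler."""
    host = urlparse(url).netloc
    lines = ROBOTS_BY_HOST.get(host)
    if lines is None:
        # Assumption: no robots.txt on record is treated as no opt-out.
        return False
    parser = RobotFileParser()
    parser.parse(lines)
    return not parser.can_fetch(CRAWLER_AGENT, url)


def filter_training_urls(urls: list[str]) -> list[str]:
    """Keep only URLs whose site has not opted out."""
    return [u for u in urls if not is_opted_out(u)]
```

A check like this only covers pages collected from now on. Applying it to data that is already inside a trained model is the retroactive problem Howley describes.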

It is also unclear who is obliged to abide by the requirements. “If you fine-tune an open-source model for a specific task, are you now the ‘provider’?” Howley said. “What if you just host it or wrap it into a downstream product? That matters because it affects who carries the compliance burden.”

Indeed, while providers of open-source GPAI models are exempt from some of the transparency obligations, the exemption does not apply if their models pose “systemic risk.” In that case, they face a different set of more rigorous obligations, including safety testing, red-teaming, and post-deployment monitoring. But since open-sourcing allows unrestricted use, tracking all downstream applications is nearly impossible, yet the provider could still be held liable for harmful outcomes.

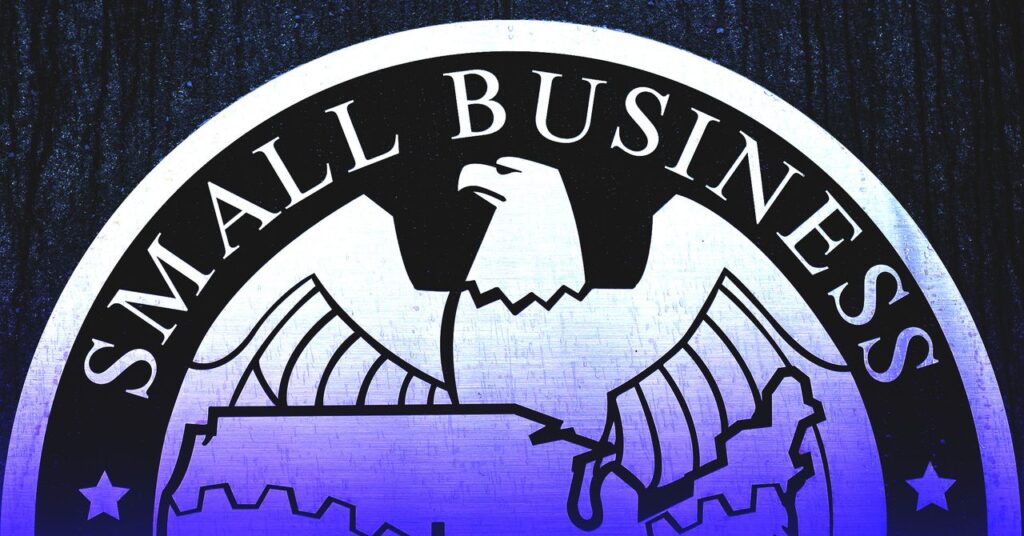

Burdensome requirements could have a disproportionate impact on AI startups

“Certain developers, despite signing the Code, have raised concerns that transparency requirements could expose trade secrets and slow innovation in Europe,” Howley told TechRepublic. OpenAI, Anthropic, and Google have committed to the Code, with the search giant in particular expressing such concerns. Meta has publicly refused to sign the Code in protest of the legislation in its current form.

“Some companies are already delaying launches or limiting access in the EU market – not because they disagree with the objectives of the Act, but because the compliance path isn’t clear, and the cost of getting it wrong is too high.”

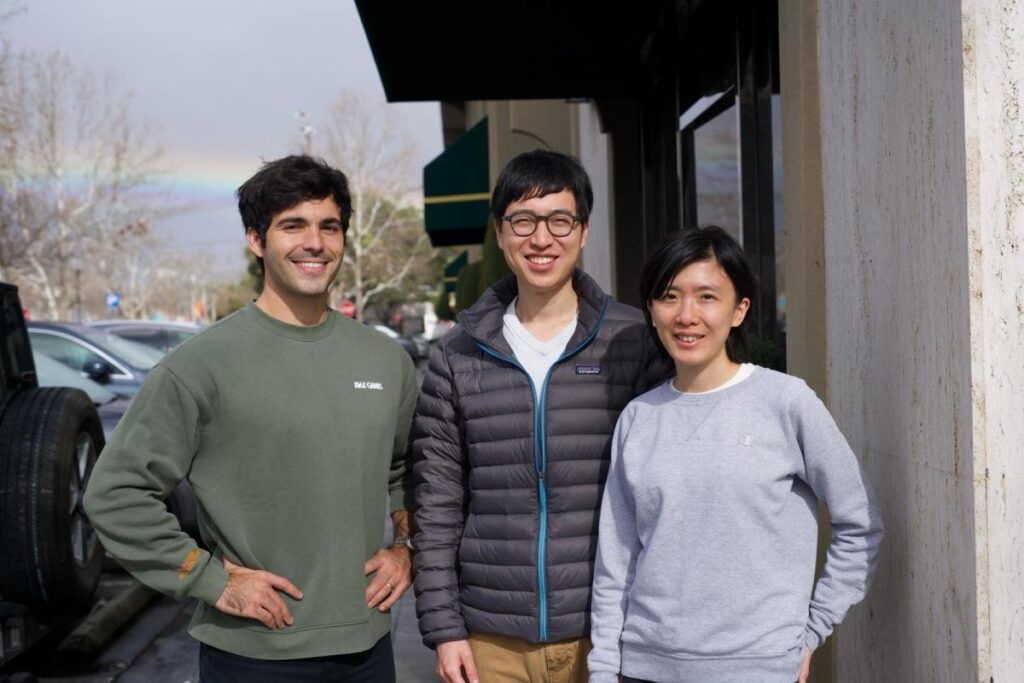

Howley said that startups are having the hardest time because they don’t have in-house legal support to help with the extensive documentation requirements. These are some of the most essential companies when it comes to innovation, and the EU recognises this.

“For early-stage developers, the risk of legal exposure or feature rollback may be enough to divert investment away from the EU altogether,” he added. “So while the Act’s objectives are sound, the risk is that its implementation slows down precisely the kind of responsible innovation it was designed to support.”

A possible knock-on effect of quashing the potential of startups is rising geopolitical tensions. The US administration’s vocal opposition to AI regulation clashes with the EU’s push for oversight, and could strain ongoing trade talks. “If enforcement actions begin hitting US-based providers, that tension could escalate further,” Howley said.

Act has very little focus on preventing bias and harmful content, limiting its effectiveness

While the Act has significant transparency requirements, there are no mandatory thresholds for accuracy, reliability, or real-world impact, Howley told TechRepublic.

“Even systemic-risk models aren’t regulated based on their actual outputs, just on the robustness of the surrounding paperwork,” he said. “A model could meet every technical requirement, from publishing training summaries to running incident response protocols, and still produce harmful or biased content.”

What rules come into effect on August 2?

There are five sets of rules that providers of GPAI models must ensure they are aware of and are complying with as of this date:

Notified bodies

Providers of high-risk GPAI models must prepare to engage with notified bodies for conformity assessments and understand the regulatory structure that supports those evaluations.

High-risk AI systems are those that pose a significant threat to health, safety, or fundamental rights. They are either: 1. used as safety components of products governed by EU product safety laws, or 2. deployed in a sensitive use case, including:

- Biometric identification

- Critical infrastructure management

- Education

- Employment and HR

- Law enforcement

GPAI models: Systemic risk triggers stricter obligations

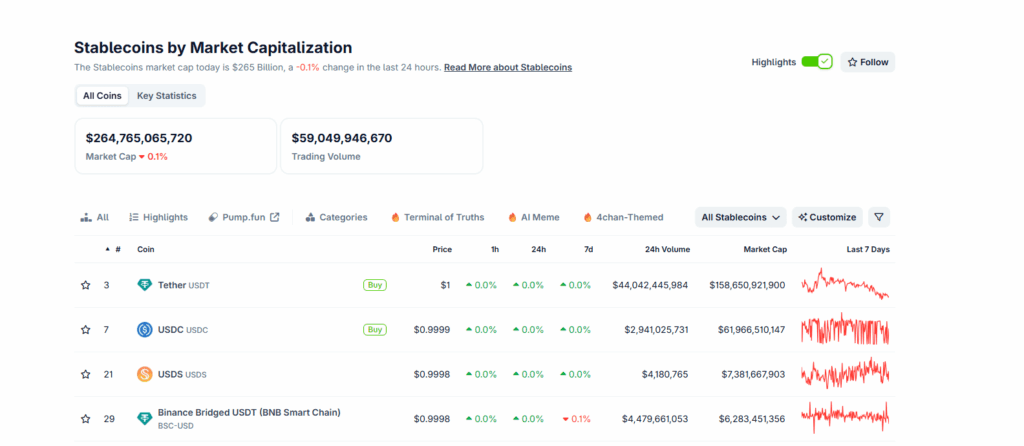

GPAI models can serve multiple purposes. These models pose “systemic risk” if the cumulative compute used to train them exceeds 10^25 floating-point operations (FLOPs) and they are designated as such by the EU AI Office. OpenAI’s ChatGPT, Meta’s Llama, and Google’s Gemini fit these criteria.
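For a sense of scale, the sketch below compares a rough training-compute estimate against the 10^25 FLOP threshold. The estimate uses the common rule of thumb of about 6 floating-point operations per parameter per training token. That heuristic comes from the machine-learning literature, not from the Act. The model sizes in the usage note are hypothetical, and crossing the threshold is only one part of the test, since designation by the EU AI Office is also involved.

```python
# Cumulative training-compute threshold cited for systemic-risk GPAI models.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_tokens: float) -> float:
    """Rough estimate of total training compute.

    Assumption: about 6 FLOPs per parameter per training token, a widely
    used heuristic that is not defined anywhere in the AI Act.
    """
    return 6.0 * n_parameters * n_tokens


def exceeds_compute_threshold(total_flops: float) -> bool:
    """Return True if the estimated compute is above the 10^25 FLOP mark."""
    return total_flops > SYSTEMIC_RISK_FLOP_THRESHOLD
```

Under these assumptions, a hypothetical 70-billion-parameter model trained on 15 trillion tokens comes to roughly 6.3 × 10^24 FLOPs, which is below the threshold. A 400-billion-parameter model on the same data comes to roughly 3.6 × 10^25, which is above it.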

All providers of GPAI models must have technical documentation, a training data summary, a copyright compliance policy, guidance for downstream deployers, and transparency measures regarding capabilities, limitations, and intended use.

Providers of GPAI models that pose systemic risk must also conduct model evaluations, report incidents, implement risk mitigation strategies and cybersecurity safeguards, disclose energy usage, and carry out post-market monitoring.

Governance: Oversight from multiple EU bodies

This set of rules defines the governance and enforcement architecture at both the EU and national levels. Providers of GPAI models will need to cooperate with the EU AI Office, European AI Board, Scientific Panel, and National Authorities in fulfilling their compliance obligations, responding to oversight requests, and participating in risk monitoring and incident reporting processes.

Confidentiality: Protections for IP and trade secrets

All data requests made to GPAI model providers by authorities will be legally justified, securely handled, and subject to confidentiality protections, especially for IP, trade secrets, and source code.

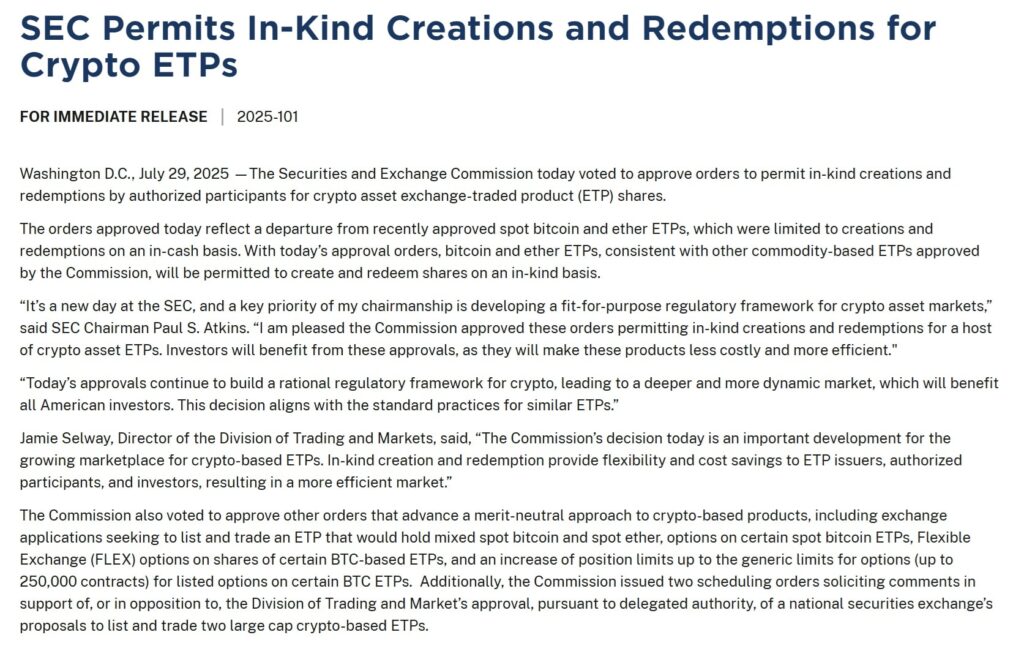

Penalties: Fines of up to €35 million or 7% of revenue

Providers of GPAI models will be subject to penalties of up to €35,000,000 or 7% of their total worldwide annual turnover, whichever is higher, for non-compliance with prohibited AI practices under Article 5, such as:

- Manipulating human behaviour

- Social scoring

- Facial recognition data scraping

- Real-time biometric identification in public

Other breaches of regulatory obligations, such as transparency, risk management, or deployment responsibilities, may result in fines of up to €15,000,000 or 3% of turnover.

Supplying misleading or incomplete information to authorities can lead to fines of up to €7,500,000 or 1% of turnover.

For SMEs and startups, the lower of the fixed amount or percentage applies. Penalties will consider the severity of the breach, its impact, whether the provider cooperated, and whether the violation was intentional or negligent.
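The fine ceilings above reduce to a small piece of arithmetic, sketched below. The tier names and the `maximum_fine` helper are illustrative, not terms from the Act. The sketch assumes the “whichever is higher” rule applies to all three tiers for larger companies, and it works in whole euros with integer percentages to avoid rounding surprises. It returns only the upper bound. Actual penalties depend on the severity, impact, cooperation, and intent factors listed above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PenaltyTier:
    fixed_cap_eur: int     # fixed ceiling in euros
    turnover_percent: int  # ceiling as a percentage of worldwide annual turnover


# Tier labels are hypothetical names for the three categories described above.
TIERS = {
    "prohibited_practice": PenaltyTier(35_000_000, 7),
    "other_obligation": PenaltyTier(15_000_000, 3),
    "misleading_information": PenaltyTier(7_500_000, 1),
}


def maximum_fine(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum possible fine in euros for a given tier.

    Larger companies face the higher of the two ceilings; SMEs and
    startups face the lower of the two.
    """
    t = TIERS[tier]
    turnover_based = worldwide_turnover_eur * t.turnover_percent // 100
    pick = min if is_sme else max
    return pick(t.fixed_cap_eur, turnover_based)
```

For example, a company with €1 billion in turnover faces a ceiling of €70 million for a prohibited practice, because 7% of turnover exceeds the €35 million fixed amount. A startup with €100 million in turnover faces a ceiling of €7 million for the same breach.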

While specific regulatory obligations for GPAI model providers begin to apply on August 2, 2025, a one-year grace period is available to come into compliance, meaning there will be no risk of penalties until August 2, 2026.

When does the rest of the EU AI Act come into force?

The EU AI Act was published in the EU’s Official Journal on July 12, 2024, and took effect on August 1, 2024; however, various provisions are applied in phases.

- February 2, 2025: Certain AI systems deemed to pose unacceptable risk (e.g., social scoring, real-time biometric surveillance in public) were banned. Companies that develop or use AI must ensure their staff have a sufficient level of AI literacy.

- August 2, 2026: GPAI models placed on the market after August 2, 2025 must be compliant by this date, as the Commission’s enforcement powers formally begin. Rules for certain listed high-risk AI systems also begin to apply to: 1. those placed on the market after this date, and 2. those placed on the market before this date that have undergone substantial modification since.

- August 2, 2027: GPAI models placed on the market before August 2, 2025, must be brought into full compliance. High-risk systems used as safety components of products governed by EU product safety laws must also comply with stricter obligations from this date.

- August 2, 2030: AI systems used by public sector organisations that fall under the high-risk category must be fully compliant by this date.

- December 31, 2030: AI systems that are components of specific large-scale EU IT systems and were placed on the market before August 2, 2027, must be brought into compliance by this final deadline.
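For GPAI providers, the phased timeline above comes down to one question: was the model placed on the market before or after August 2, 2025? The sketch below encodes that rule. The function name is hypothetical. Treating a model placed on the market on August 2, 2025 itself as belonging to the later group is an assumption, since the dates above only describe “before” and “after”.

```python
from datetime import date

# Date on which the GPAI obligations begin to apply.
GPAI_OBLIGATIONS_START = date(2025, 8, 2)


def gpai_compliance_deadline(placed_on_market: date) -> date:
    """Return the date by which a GPAI model must be compliant."""
    if placed_on_market < GPAI_OBLIGATIONS_START:
        # Models already on the market get until August 2, 2027.
        return date(2027, 8, 2)
    # Newer models must comply by August 2, 2026, when enforcement begins.
    # Assumption: a model placed exactly on August 2, 2025 falls here.
    return date(2026, 8, 2)
```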

A group representing Apple, Google, Meta, and other companies urged regulators to postpone the Act’s implementation by at least two years, but the EU rejected this request.